The IPTC Photo Metadata Working Group is proud to announce the IPTC Photo Metadata Conference 2022. The event will be held online on Thursday November 10th from 15.00 – 18.00 UTC.

This year the theme is Photo Metadata in the Real World. Last year we introduced two new developments: the IPTC Accessibility properties and the C2PA specification for embedding provenance data in photo and video content. This year we revisit both technologies to see how they are being adopted by software systems, publishers and broadcasters around the world.

The 3-hour meeting will host four sessions:

- Adoption of the IPTC Accessibility Properties – we hear from vendors and content creators on how they are progressing in implementing the new properties to support accessibility

- Software Supporting the IPTC Photo Metadata Standard – showcasing an update to IPTC’s directory of software supporting the IPTC Photo Metadata Standard, including field-by-field reference tables letting users compare software implementations

- Use of C2PA in real workflows – showcasing early work on implementing the C2PA specification in media organisations

- Artificial Intelligence and metadata – looking at the questions around copyright and synthetic media: for example, when generative AI uses thousands of potentially copyrighted images to train machine learning models, who owns the resulting images?

We look forward to welcoming all interested parties to the conference – no IPTC membership is needed to attend. The event will be held as a Zoom webinar.

Please see more information and the Zoom registration link on the event page.

See you there on November 10th!

Last week the IPTC held its Autumn Meeting 2022, a three-day online event with over 70 attendees from more than 20 countries.

Discussions were as wide-ranging as ever. Highlights included a guest presentation from Toby Allen, startup advisor and former member of the Microsoft HoloLens team, on how media organisations can prepare for the Metaverse. Members also held intense discussions about making our work on machine-readable rights and RightsML simpler and more accessible, and about bridging the gap between ninjs, our simple, lightweight JSON news standard, and NewsML-G2, our richly structured, full-featured XML-based standard.

We also heard about many other topics:

- Meinolf Ellers at IPTC member dpa spoke about the DRIVE initiative, which follows on from the C-POP project that IPTC advised on in 2019 and 2020. DRIVE allows consortium members to share data about content usage to drive subscriptions and engagement, and to find under-represented areas in their news output to meet audience needs.

- We heard about representing social media content in NewsML-G2: Dave Compton of Refinitiv spoke about their work encoding content from Twitter and other social networks in NewsML-G2 format for re-use, enhancement and syndication.

- Will Kreth, previously CEO of EIDR, spoke about the HAND project which aims to create a unique identifier for media and sports talent

- Fredrik Lundberg from IPTC member iMatrics and guest presenter Jens Pehrson from GOTA Media spoke about a new tool they have developed that allows publishers to track the gender balance in their news content

- Johan Lindgren from IPTC member TT (the Swedish national news agency) spoke about their recent project to develop a classification and entity extraction engine for their news content, based on the IPTC Media Topics taxonomy

- We heard from Audren Layeux of CARSA who spoke about the European Media Data Space project, an EU initiative

- Ben Colman, CEO of RealityDefender, spoke (direct from TechCrunch Disrupt in San Francisco!) about their deepfake detection technology, which social media networks, financial institutions and media organisations use to detect manipulated images and videos.

- IPTC MD Brendan Quinn spoke about IPTC’s ongoing work with C2PA and Project Origin, including forthcoming additions to C2PA to support video metadata.

In addition, we heard updates from all IPTC Working Groups: Dave Compton introduced NewsML-G2 2.31; Paul Kelly spoke about some new developments in the RDF-based sports data model, which will be announced soon; Pam Fisher described the work of the Video Metadata Working Group and the changes coming in Video Metadata Hub v1.4; and David Riecks and Michael Steidl spoke about Photo Metadata Standard 2022.1 and the ongoing work of the Photo Metadata Working Group.

The Standards Committee voted in new standard versions: NewsML-G2 v2.31, Video Metadata Hub v1.4, and Photo Metadata Standard 2022.1. These will be released and publicised over the coming weeks.

The IPTC Annual General Meeting 2022 saw Johan Lindgren step down from the Board of Directors after 6 years of service. Thanks very much for all your help, Johan!

We are very happy to welcome a new Board member: Heather Edwards of Associated Press.

Thanks very much to everyone who attended and spoke. You contributed to making it a great event for all!

As usual, full recordings of all sessions are available to IPTC members on the members-only event page.

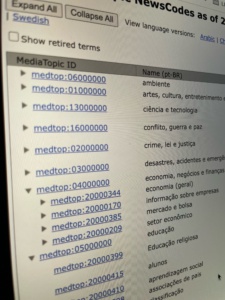

As is now traditional, the IPTC NewsCodes Working Group has released its regular update at the end of the calendar quarter.

This release includes updates to the Media Topic and Item Relation CVs.

Changes to the Media Topic vocabulary

Label and/or definition changes:

- medtop:20001304 sports award -> sports honour (definition also changed)

- medtop:20001303 sports medal -> sports medal and trophy (definition also changed)

- medtop:20001302 sports record (definition changed)

- medtop:20001104 drug use in sport (definition changed)

Retired terms:

- medtop:20001105 drug abuse in sport: RETIRED

- medtop:20001106 drug testing in sport: RETIRED

- medtop:20001107 medical drug use in sport: RETIRED

Hierarchy moves:

- medtop:20001338 education policy: moved from medtop:05000000 education to medtop:20000621 government policy. This change was suggested by ABC Australia – thanks very much!

New terms:

- medtop:20001360 fraternal and community group (child of medtop:20000768 communities)

- medtop:20001361 cyber warfare (child of medtop:16000000 conflict, war and peace)

- medtop:20001362 public transport (child of medtop:20000337 transport) – suggested by NTB Norway

- medtop:20001363 taxi and ride-hailing (child of medtop:20000337 transport)

- medtop:20001364 shared transport (child of medtop:20000337 transport)

The release also includes no-NN (New Norwegian) translations for the updates released in Q2 2022. Other languages were already updated in previous months.

Changes to other Controlled Vocabularies

The itemrelation CV is used in NewsML-G2 to show types of links between news items. The vocabulary now has two new terms:

- irel:translatedFromRoot: “The related resource contains the content from which this item was translated, either directly or indirectly via one or more other translations”

- irel:wasPackagedIn: “Indicates that this Item was included in the target package”
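The new terms are used as the rel value of a NewsML-G2 link. Below is a minimal sketch in Python (standard library only) that builds an itemMeta fragment carrying both new relation types. It assumes the usual NewsML-G2 namespace and link attributes (rel, residref). The residref identifiers are hypothetical. A real item also needs the mandatory itemMeta children, such as itemClass and provider, which are left out here.

```python
import xml.etree.ElementTree as ET

# NewsML-G2 elements live in the IPTC "nar" namespace (assumed here).
NAR = "http://iptc.org/std/nar/2006-10-01/"
ET.register_namespace("", NAR)  # serialise it as the default namespace


def item_meta_with_links(links):
    """Build a bare itemMeta fragment with one typed link per pair.

    `links` is a list of (rel_qcode, residref) tuples. Mandatory itemMeta
    children such as itemClass and provider are omitted in this sketch.
    """
    item_meta = ET.Element(f"{{{NAR}}}itemMeta")
    for rel, residref in links:
        # rel holds a QCode from the itemrelation CV, e.g. irel:wasPackagedIn
        ET.SubElement(item_meta, f"{{{NAR}}}link", rel=rel, residref=residref)
    return item_meta


# The residref values are hypothetical identifiers for illustration only.
meta = item_meta_with_links([
    ("irel:translatedFromRoot", "urn:example:item:story-original-en"),
    ("irel:wasPackagedIn", "urn:example:package:evening-bundle"),
])
print(ET.tostring(meta, encoding="unicode"))
```

A translated item can point straight at its root source with translatedFromRoot, even if it was translated via an intermediate language. Receivers therefore do not need to walk the whole translation chain.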

Thanks to the IPTC members and NewsCodes users who suggested terms, and to the NewsCodes and Sports Content Working Groups who helped to put this release together.

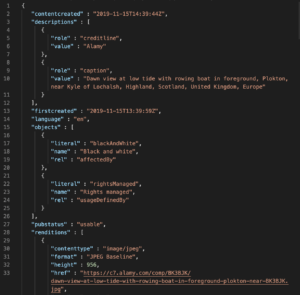

Alamy, a stock photo agency offering a collection of over 300 million images along with millions of videos, has recently launched a new Partnerships API, and has chosen IPTC’s ninjs 2.0 standard as the main format behind the API.

Alamy is an IPTC member via its parent company PA Media, and Alamy staff have contributed to the development of ninjs in recent years, leading to the introduction of ninjs 2.0 in 2021.

“When looking at a response format, we sought to adopt an industry standard which would aid in the communication of the structure of the responses but also ease integration with partners who may already be familiar with the standard,” said Ian Young, Solutions Architect at Alamy.

“With this in mind, we chose IPTC’s news in JSON format, ninjs,” he said. “We selected version 2 specifically due to its structural improvements over version 1 as well as its support for rights expressions.”

Young continued: “ninjs allows us to convey the metadata for our content, links to the media itself and the various supporting renditions as well as conveying machine readable rights in a concise payload.”

“We’ve integrated with customers who are both familiar with IPTC standards and those who are not, and each have found the API equally easy to work with.”

Learn more about ninjs via IPTC’s ninjs overview pages, consult the ninjs User Guide, or try it out yourself using the ninjs generator tool.

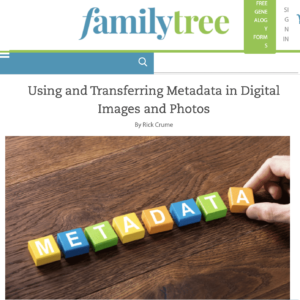

Family Tree magazine has published a guide on using embedded metadata for photographs in genealogy – the study of family history.

Rick Crume, a genealogy consultant and the article’s author, says IPTC metadata “can be extremely useful for savvy archivists […] IPTC standards can help future-proof your metadata. That data becomes part of the digital photo, contained inside the file and preserved for future software programs.”

Crume quotes Ken Watson from All About Digital Photos saying “[IPTC] is an internationally recognized standard, so your IPTC/XMP data will be viewable by someone 50 or 100 years from now. The same cannot be said for programs that use some proprietary labelling schemes.”

Crume then adds: “To put it another way: If you use photo software that abides by the IPTC/XMP standard, your labels and descriptive tags (keywords) should be readable by other programs that also follow the standard. For a list of photo software that supports IPTC Photo Metadata, visit the IPTC’s website.”
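To show what “readable by other programs” means in practice, here is a rough Python sketch. It pulls the keywords out of an XMP packet embedded in a file's bytes. In XMP, IPTC Core Keywords are stored as a dc:subject bag. The sketch makes a simplifying assumption: it finds the packet by searching for the x:xmpmeta tags in UTF-8 text. Real tools use a proper metadata library and handle other encodings and legacy IPTC-IIM blocks. The sample bytes are hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "dc": "http://purl.org/dc/elements/1.1/",
}
START, END = b"<x:xmpmeta", b"</x:xmpmeta>"


def read_keywords(file_bytes):
    """Return the dc:subject keywords from the first XMP packet, if any.

    Simplification: assumes a UTF-8 packet using the usual x:xmpmeta prefix.
    """
    start = file_bytes.find(START)
    end = file_bytes.find(END, start)
    if start == -1 or end == -1:
        return []  # no embedded XMP found
    packet = file_bytes[start:end + len(END)]
    root = ET.fromstring(packet)
    # Keywords are rdf:li items inside the dc:subject bag.
    return [li.text for li in root.iterfind(".//dc:subject/rdf:Bag/rdf:li", NS)]


# Hypothetical file contents: binary data surrounding an XMP packet.
sample = (
    b"\xff\xd8 ...binary image data... "
    b'<x:xmpmeta xmlns:x="adobe:ns:meta/">'
    b'<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">'
    b'<rdf:Description xmlns:dc="http://purl.org/dc/elements/1.1/">'
    b"<dc:subject><rdf:Bag>"
    b"<rdf:li>grandparents</rdf:li><rdf:li>wedding 1933</rdf:li>"
    b"</rdf:Bag></dc:subject>"
    b"</rdf:Description></rdf:RDF></x:xmpmeta>"
    b" ...more data\xff\xd9"
)
print(read_keywords(sample))  # ['grandparents', 'wedding 1933']
```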

The article goes on to recommend particular software choices based on IPTC’s list of photo software that supports IPTC Photo Metadata. In particular, Crume recommends that users don’t switch from Picasa to Google Photos, because Google Photos does not support IPTC Photo Metadata in the same way. Instead, he recommends that users stick with Picasa for as long as possible, and then choose another photo management tool from the supported software list.

Similarly, Crume recommends that users should not move from Windows Photo Gallery to the Windows 10 Photos app, because the Photos app does not support IPTC embedded metadata.

Crume then goes on to investigate popular genealogy sites to examine their support for embedded metadata, something that we do not cover in our photo metadata support surveys.

The full article can be found on FamilyTree.com.

Following on with our quarterly update cycle, the IPTC NewsCodes Working Group has released the Q2 2022 update of IPTC NewsCodes, including updates to the Media Topic, Subject Code, and Digital Source Type vocabularies.

Media Topic updates

Translation changes:

- A new language translation for “New Norwegian” (Norwegian nynorsk, no-NN) has been added to all labels. The existing Norwegian labels previously tagged with “no” are now tagged as “no-NB” for Norwegian bokmål. Thanks very much to NTB for providing the update.

Label and definition changes:

- medtop:20000446 diseases and conditions

- medtop:20001230 corporate social responsibility -> environmental, social and governance policy (ESG)

- medtop:20000449 epidemic -> epidemic and pandemic

- medtop:20000451 virus disease -> viral disease

- medtop:20000452 AIDS -> HIV and AIDS

- medtop:20000457 medical conditions -> medical condition

- medtop:20000458 mental health and disorder

- medtop:20000463 health organisation

- medtop:20000464 health treatment -> health treatment and procedure

- medtop:20000466 dietary supplement

- medtop:20000467 medical drugs -> non-prescription drug

- medtop:20000468 prescription drugs -> prescription drug

- medtop:20000469 medical procedure/test -> medical test

- medtop:20000470 medicine -> health care approach

- medtop:20000474 western medicine -> conventional medicine

- medtop:20000480 government health care

- medtop:20001225 ophthalmology -> eye care

Definition changes:

- medtop:07000000 health

- medtop:20000784 family planning

- medtop:20000454 heart disease

- medtop:20000456 injury

- medtop:20000461 health facility

- medtop:20000465 diet

- medtop:20001219 drug rehabilitation

- medtop:20001221 emergency care

- medtop:20000471 herbal medicine

- medtop:20000472 holistic medicine

- medtop:20000473 traditional Chinese medicine

- medtop:20000479 healthcare policy

- medtop:20000483 health insurance

- medtop:20000484 private health care

- medtop:20000486 medical service

- medtop:20000490 paediatrics

Hierarchy moves:

- medtop:20000500 animal moves to become a child of medtop:20000441 nature

- medtop:20000507 flowers and plants moves to become a child of medtop:20000441 nature

- medtop:20001318 pests moves to become a child of medtop:20000500 animal

- medtop:20000494 animal disease moves to become a child of medtop:20000500 animal

- medtop:20000495 plant disease moves to become a child of medtop:20000507 flowers and plants

- medtop:20000460 obesity becomes a child of medtop:20000457 medical condition

- medtop:20000477 vaccine becomes a child of medtop:20000464 health treatment and procedure

New terms:

- medtop:20001355 developmental disorder

- medtop:20001356 depression

- medtop:20001357 anxiety and stress

- medtop:20001358 public health

- medtop:20001359 pregnancy and childbirth

Retired terms:

- medtop:20001218 pandemic (use the new “epidemic and pandemic” term instead)

- medtop:20000450 plague (disease)

- medtop:20000453 retrovirus

- medtop:20000455 illness

- medtop:20000475 physical fitness

- medtop:20000476 preventative medicine

- medtop:20001220 general practice

- medtop:20000488 geriatric medicine

- medtop:20000489 obstetrics/gynaecology

- medtop:20001223 oncology

- medtop:20001222 orthopaedics

- medtop:20000713 pharmacology

- medtop:20001227 psychiatry

- medtop:20001224 radiology

- medtop:20000491 reproductive medicine

- medtop:20001226 surgical medicine

- medtop:20000493 non-human diseases
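Users with Media Topics already stored may need a small client-side migration. Below is a minimal Python sketch. It assumes a hypothetical storage layout: a list of QCodes per item, plus locally cached labels keyed by language tag. The release notes give one replacement, pandemic becomes “epidemic and pandemic”, so only that code is remapped. The other retired codes are kept but flagged for editorial review. Labels tagged plain “no” are retagged as “no-NB”.

```python
# Retired term whose replacement is named in the Q2 2022 release notes.
RETIRED_REPLACEMENTS = {
    "medtop:20001218": "medtop:20000449",  # pandemic -> epidemic and pandemic
}

# Retired terms for which the notes give no one-to-one replacement.
RETIRED_FOR_REVIEW = {
    "medtop:20000450", "medtop:20000453", "medtop:20000455",
    "medtop:20000475", "medtop:20000476", "medtop:20001220",
    "medtop:20000488", "medtop:20000489", "medtop:20001223",
    "medtop:20001222", "medtop:20000713", "medtop:20001227",
    "medtop:20001224", "medtop:20000491", "medtop:20001226",
    "medtop:20000493",
}


def remap_subjects(subjects):
    """Return (updated QCode list, codes that need an editor's review)."""
    updated, review = [], []
    for code in subjects:
        code = RETIRED_REPLACEMENTS.get(code, code)
        if code in RETIRED_FOR_REVIEW:
            review.append(code)  # keep it, but ask an editor to reclassify
        if code not in updated:  # avoid duplicates after remapping
            updated.append(code)
    return updated, review


def retag_norwegian(labels):
    """Labels tagged plain "no" were Norwegian bokmål; retag them "no-NB"."""
    return {("no-NB" if lang == "no" else lang): text
            for lang, text in labels.items()}


subjects, review = remap_subjects(["medtop:20001218", "medtop:20001227"])
labels = retag_norwegian({"en-GB": "pandemic", "no": "pandemi"})
print(subjects, review, labels)
```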

In a related tool update announcement, we have now added a handy “show retired terms” checkbox to the Media Topics interactive tree browser tool, and we default to only showing the active (non-retired) terms. The new option can be seen in the picture at the top of this article.

Digital Source Type vocabulary updates

After asking for feedback on a draft of the work a few months ago, we have updated the Digital Source Type vocabulary to support the emerging area of “Synthetic Media.”

The single term “softwareImage” has been retired: it remains acceptable in legacy content, but we no longer recommend its use. It is replaced by nine new terms covering the spectrum from purely human creation through to purely machine-generated images:

- Original media with minor human edits

- Composite of captured elements

- Algorithmically-enhanced media

- Data-driven media

- Digital art

- Virtual recording

- Composite including synthetic elements

- Trained algorithmic media

- Pure algorithmic media

- RETIRED: Created by software

To see more detail, including the definition of each term, view the entire IPTC Digital Source Type vocabulary.
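In embedded metadata, each term is referenced by a URI in the Digital Source Type vocabulary. The Python sketch below assumes the camel-case codes listed in its table (for example trainedAlgorithmicMedia for “Trained algorithmic media”). Please confirm those codes against the published vocabulary before relying on them. The check covers only the nine new terms and the retired softwareImage. Terms that were already in the vocabulary before this update are deliberately not listed, so “not listed” does not mean “invalid”.

```python
BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"

# Assumed codes for the nine new terms; check against the published CV.
NEW_TERMS = {
    "minorHumanEdits": "Original media with minor human edits",
    "compositeCapture": "Composite of captured elements",
    "algorithmicallyEnhanced": "Algorithmically-enhanced media",
    "dataDrivenMedia": "Data-driven media",
    "digitalArt": "Digital art",
    "virtualRecording": "Virtual recording",
    "compositeSynthetic": "Composite including synthetic elements",
    "trainedAlgorithmicMedia": "Trained algorithmic media",
    "algorithmicMedia": "Pure algorithmic media",
}
RETIRED_TERMS = {"softwareImage": "Created by software"}


def classify_source_type(uri):
    """Return 'new', 'retired' or 'not listed' for a Digital Source Type URI."""
    if not uri.startswith(BASE):
        return "not listed"
    code = uri[len(BASE):]
    if code in NEW_TERMS:
        return "new"
    if code in RETIRED_TERMS:
        return "retired"  # acceptable in legacy content, not recommended
    return "not listed"


print(classify_source_type(BASE + "softwareImage"))  # retired
```

The retired term has no single replacement. A workflow that finds softwareImage therefore has to pick one of the nine new terms case by case, instead of rewriting the value automatically.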

Thanks to those both inside and outside the IPTC community who gave feedback on our original proposal; your comments were very much appreciated.

Subject Code vocabulary updates – indicating its deprecated status

The IPTC Subject Code vocabulary was created over twenty years ago, in the year 2000. It was maintained through to 2010, but at that point the Media Topic vocabulary took over as IPTC’s preferred subject classification taxonomy. We will keep it on our vocabulary server, but we no longer recommend its use in projects due to some terms being out of date.

So we have put warnings on the pages of the Subject Code vocabulary that indicate its deprecated status and encourage users to look at Media Topic instead.

As always, the Media Topics vocabularies can be viewed in the following ways:

- In a collapsible tree view

- As a downloadable Excel spreadsheet

- On one page on the cv.iptc.org server

- In machine readable formats such as RDF/XML and Turtle using the SKOS vocabulary format: see the cv.iptc.org guidelines document for more detail.
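In NewsML-G2 and similar formats, terms are usually written as short QCodes such as medtop:20000449, while the vocabulary server identifies each concept by a full URI. Below is a minimal Python sketch of converting between the two. The alias-to-URI table is an assumption made for illustration. The cv.iptc.org guidelines document describes the authoritative scheme URIs.

```python
# Assumed alias-to-scheme mapping; check the cv.iptc.org guidelines.
SCHEME_ALIASES = {
    "medtop": "http://cv.iptc.org/newscodes/mediatopic/",
    "irel": "http://cv.iptc.org/newscodes/itemrelation/",
}


def qcode_to_uri(qcode):
    """Expand a QCode such as 'medtop:20000449' into a full concept URI."""
    alias, sep, code = qcode.partition(":")
    if not sep or not code or alias not in SCHEME_ALIASES:
        raise ValueError(f"unknown or malformed QCode: {qcode!r}")
    return SCHEME_ALIASES[alias] + code


def uri_to_qcode(uri):
    """Compress a concept URI back into QCode form."""
    for alias, base in SCHEME_ALIASES.items():
        if uri.startswith(base) and len(uri) > len(base):
            return f"{alias}:{uri[len(base):]}"
    raise ValueError(f"no known scheme for URI: {uri!r}")


print(qcode_to_uri("medtop:20000449"))
# http://cv.iptc.org/newscodes/mediatopic/20000449
```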

For more information on IPTC NewsCodes in general, please see the IPTC NewsCodes Guidelines.

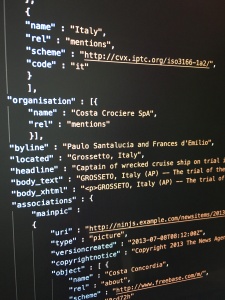

At the IPTC Spring Meeting in May 2022, IPTC’s Standards Committee voted to approve ninjs 1.4, the latest version in the 1.x track of IPTC’s standard for news content in the JSON format.

Johan Lindgren of TT Nyhetsbyrån, Lead of the IPTC News in JSON Working Group, said:

“After the launch of ninjs 2.0 in the autumn of 2021, we received requests to add some of the new 2.0 features to the first generation of ninjs, so that those who are using the 1.x branch of ninjs can use the new features without making breaking changes. So we are excited to publish version 1.4 of ninjs, where these features are included.”

Those changes include:

- New property contentcreated, denoting the date and time when the content of this ninjs object was originally created (as opposed to the date and time when the ninjs object itself was created). For example, an old photo that is now handled as a ninjs object may have a firstcreated and versioncreated of “2022-06-02T12:00:00+00:00”, but a contentcreated value of “1933-04-03T00:00:00+00:00”. The value must be a string in the JSON Schema date-time format.

- New property expires, showing “the date and time after which the Item is no longer considered editorially relevant by its provider.” Note that this is not the same as a rights-related expiration: it simply conveys the content creator’s wish to highlight the content until a certain time. A good example might be a football match preview, which would no longer be editorially relevant once the game commences. The value must be a string in the JSON Schema date-time format.

- New property rightsinfo, which holds an expression of rights to be applied to the content. It contains sub-properties langid (a URI which specifies the language used to specify rights such as RightsML or ODRL), and one of either linkedrights (containing a link to a remotely-hosted declaration of the rights associated with the content) or encodedrights (which includes an embedded encoding of the rights statements within the ninjs object).
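The three additions can be checked with a few lines of code. Below is a rough Python sketch using only the standard library. It reads the description above as requiring langid plus exactly one of linkedrights or encodedrights. It approximates the date-time format with datetime.fromisoformat and requires a UTC offset. Full validation should use the published JSON Schema. The item reuses the old-photo example; its uri, langid and linkedrights values are hypothetical placeholders.

```python
import json
from datetime import datetime


def is_date_time(value):
    """Rough date-time check: an ISO 8601 timestamp with a UTC offset.

    Strict RFC 3339 validation would need a JSON Schema validator.
    """
    if not isinstance(value, str) or "T" not in value:
        return False
    try:
        parsed = datetime.fromisoformat(value)
    except ValueError:
        return False
    return parsed.tzinfo is not None


def check_ninjs_14_additions(item):
    """Return a list of problems with the properties added in ninjs 1.4."""
    problems = []
    for key in ("contentcreated", "expires"):
        if key in item and not is_date_time(item[key]):
            problems.append(f"{key} is not a date-time string")
    rights = item.get("rightsinfo")
    if rights is not None:
        if "langid" not in rights:
            problems.append("rightsinfo.langid is missing")
        # Exactly one of linkedrights / encodedrights should be present.
        if ("linkedrights" in rights) == ("encodedrights" in rights):
            problems.append("rightsinfo needs exactly one of "
                            "linkedrights or encodedrights")
    return problems


# Old-photo example from the text; URIs below are hypothetical placeholders.
item = {
    "uri": "urn:example:picture:archive-1933-0001",
    "type": "picture",
    "firstcreated": "2022-06-02T12:00:00+00:00",
    "versioncreated": "2022-06-02T12:00:00+00:00",
    "contentcreated": "1933-04-03T00:00:00+00:00",
    "rightsinfo": {
        "langid": "https://example.com/rights-language",
        "linkedrights": "https://example.com/rights/archive-1933-0001",
    },
}
print(json.dumps(item, indent=2))
print(check_ninjs_14_additions(item))  # []
```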

Which version of ninjs should I choose for my project?

There might be some confusion since we released ninjs 1.4 after ninjs 2.0. Please note that this is simply an update to the 1.x branch of ninjs, for users who cannot upgrade to the 2.x branch because of its breaking changes.

If you are starting a new project that requires JSON-encoded news content, we recommend using ninjs 2.0. This version should be easiest for developers to work with.

If you are already using a 1.x version of ninjs, we recommend at least upgrading to version 1.4. This should be an easy change, because 1.4 is backwards-compatible with versions 1.0, 1.1, 1.2 and 1.3. We would also recommend upgrading to 2.0 if possible, but if not, 1.4 is the best version of the 1.x branch.

Supporting materials for ninjs 1.4 and ninjs 2.0 can be found at these locations:

- JSON Schema for ninjs 1.4: https://iptc.org/std/ninjs/ninjs-schema_1.4.json

- JSON Schema for ninjs 2.0: https://iptc.org/std/ninjs/ninjs-schema_2.0.json

- User Guide for ninjs (covering version 2.0 but mentioning 1.4): https://iptc.org/std/ninjs/userguide/

- The ninjs Generator has been updated to output 1.4-compatible JSON: https://www.iptc.org/std/ninjs/generator/

Thanks to Johan and the IPTC News in JSON Working Group for working on this release.

The IPTC took part in a panel on Diversity and Inclusion at the CEPIC Congress 2022, the picture industry’s annual get-together, held this year in Mallorca, Spain.

Google’s Anna Dickson hosted the panel, which also included Debbie Grossman of Adobe Stock, Christina Vaughan of ImageSource and Cultura, and photographer Ayo Banton.

Unfortunately Abhi Chaudhuri of Google couldn’t attend due to Covid, but Anna presented his material on Google’s new work surfacing skin tone in Google image search results.

Brendan Quinn, IPTC Managing Director, participated on behalf of the IPTC Photo Metadata Working Group, which put together the Photo Metadata Standard, including the new properties covering accessibility for visually impaired people: Alt Text (Accessibility) and Extended Description (Accessibility).

Brendan also discussed IPTC’s other Photo Metadata properties concerning diversity, including the Additional Model Information which can include material on “ethnicity and other facets of the model(s) in a model-released image”, and the characteristics sub-property of the Person Shown in the Image with Details property which can be used to enter “a property or trait of the person by selecting a term from a Controlled Vocabulary.”

Some interesting conversations ensued around the difficulty of keeping diversity information up to date in an ever-changing world of diversity language, the pros and cons of using controlled vocabularies (pre-selected word lists) to cover diversity information, and the differences in covering identity and diversity information on a self-reported basis versus reporting by the photographer, photo agency or customer.

It’s a fascinating area and we hope to be able to support the photographic industry’s push forward with concrete work that can be implemented at all types of photographic organisations to make the benefits of photography accessible for as many people as possible, regardless of their cultural, racial, sexual or disability identity.

Where else can you hear about the difficulties of examining photo metadata in NFTs, see a lifelike image of a human being generated from pure data before your eyes, see how Wikidata can be used to take semantic fingerprints of news articles, and discover that an hour is nowhere near long enough to discuss simplifying machine-readable rights? Nowhere but the IPTC Meeting, of course! And this year’s Spring Meeting was the venue for all of this and much more.

We held the meeting virtually from Monday May 16th to Wednesday May 18th, and over 70 people from at least 45 organisations across more than 20 countries attended.

Along with our usual Working Group updates and committee meetings, we invited speakers from several fascinating startups, services and projects at member companies. Here’s a quick summary of their sessions:

- We heard from Kairntech who are working on a classification system based on extracting entities from news stories and building a “semantic fingerprint” which can be used for cross-language classification, search and content enhancement

- The New York Times’ R&D Lab presented PaperTrail, a project to enhance the quality of the Times’ print archive through the use of machine learning to improve on basic OCR techniques (they’re looking for collaborators, more info coming soon!)

- Bria.ai showed us how an API can be used to enhance and create images and videos through the use of a custom GAN model trained in a “responsible AI” method

- Margaret Warren talked us through her efforts in creating and selling an NFT, looking at the process from the perspective of a photo metadata expert

- Consultant and author Henrik de Gyor talked us through the latest in synthetic media, which will help us to finalise our Digital Source Type vocabulary for synthetic media

- Laurent Le Meur from EDRLab presented his project’s recommendation on a Text and Data Mining Reservation Protocol, which can be used by publishers to restrict the rights of data miners in scraping any content for the purpose of analysis or building a model

- We heard from Dominic Young of Axate on his approach to offer pay-as-you-go payment options on paywalled news sites based on a simple pre-paid wallet mechanism.

We also had many announcements and discussions around IPTC standards, many of which we will be revealing in the coming months. One notable update is that the Standards Committee approved ninjs version 1.4 which we will release soon.

Thanks to all the IPTC members, Working Group leads, committee members and guests who made this member meeting one to remember.

The National Association of Broadcasters (NAB) Show wrapped up its first face-to-face event in three years last week in Las Vegas. In spite of the name, this is an internationally attended trade conference and exhibition showcasing equipment, software and services for film and video production, management and distribution. There were 52,000 attendees, down from a typical 90-100k, with some reduction in booth density; overall the show was reminiscent of pre-COVID days. A few IPTC members met while there: Mark Milstein (vAIsual), Alison Sullivan (MGM Resorts), Phil Avner (Associated Press) and Pam Fisher (The Media Institute). Kudos to Phil, who was working on the AP stand showcasing ENPS while the rest of us walked the exhibition floor.

NAB is a long-running event and several large vendors have large ‘anchor’ booths. Some, such as Panasonic and Adobe, reduced their normal NAB booth size, while Blackmagic had their normal ‘city block’-sized presence, teeming with traffic. In some ways the reduced booth density was ideal for visitors: plenty of tables and chairs populated the open areas, making more meeting and refreshment space available. The NAB exhibition is substantially more widely attended than the conference, and this year several theatres were provided on the show floor for sessions any ‘exhibits only’ attendee could watch. Some content is now available here: https://nabshow.com/2022/videos-on-demand/

For the most part this was a show of ‘consolidation’ rather than ‘innovation’: exhibitors were enjoying welcoming their partners and customers face-to-face rather than launching significant new products. Codecs standardised during the past several years were finally reaching mainstream support, with AV1, VP9 and HEVC well represented across vendors. SVT-AV1 (Scalable Video Technology) was particularly prevalent, having been well optimised and made available to use license-free by the standard’s contributors. VVC (Versatile Video Coding), a more recent and more advanced standard, is still too computationally intensive for commercial use, though a few exhibitors mentioned it on their stands (e.g. Fraunhofer).

IP is now fairly ubiquitous within broadcast ecosystems. To consolidate further, an IP Showcase booth illustrating support across standards bodies and professional organisations championed more sophisticated adoption. A pyramid graphic showing a cascade of ‘widely available’ to ‘rarely available’ sub-systems encouraged deeper adoption.

Super Resolution – raising the game for video upscaling

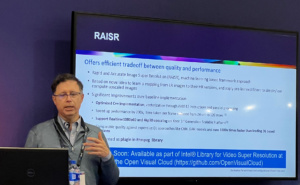

One of the show floor sessions, “Improving Video Quality with AI”, presented advances by iSIZE and Intel. The Intel technology, which concerns “Super Resolution”, may be particularly interesting to IPTC members. I have followed the subject for over 20 years, and for me this was a personal highlight of the show.

Super Resolution is a technique for creating higher resolution content from smaller originals. For example, achieving a professional quality 1080p video from a 480p source, or scaling up a social media-sized image for feature use.

A few years ago a novel and highly effective Super Resolution method, “RAISR”, was developed (see https://arxiv.org/abs/1606.01299). It represented a major discontinuity in the field, albeit with the usual mountain of investment and work needed to take the ‘R’ (research) to ‘D’ (development).

This is exactly what Intel have done, and the resulting toolsets will be made available at no cost at the company’s Open Visual Cloud repository at the end of May.

Intel invested four years in improving the AI/ML algorithms (having created a massive ground truth library for learning), optimising to CPUs for performance and parallelisation, and then engineering the ‘applied’ tools developers need for integration (e.g. Docker containers, FFmpeg and GStreamer plug-ins). Performance will now be commercially robust.

The visual results are astonishing, and could have a major impact on the commercial potential of photographic and film/video collections needing to reach much higher resolutions or even to repair ‘blurriness’.
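As described in the linked RAISR paper, the method first enlarges the low-resolution image with a cheap interpolator such as bilinear. It then sharpens the result with learned filters, chosen per pixel from local gradient statistics. The Python sketch below shows only that cheap first stage, plain bilinear upscaling of a greyscale image stored as nested lists. It is not RAISR itself and not Intel's implementation. The learned-filter stage, which produces the striking results, is what it leaves out.

```python
def bilinear_upscale(img, factor):
    """Upscale a 2D greyscale image (list of rows) by an integer factor.

    Corner pixels are aligned, so the output corners equal the input corners.
    """
    h, w = len(img), len(img[0])
    out_h, out_w = h * factor, w * factor
    out = []
    for y in range(out_h):
        # Map the output row to a fractional source row.
        sy = y * (h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(out_w):
            # Map the output column to a fractional source column.
            sx = x * (w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Interpolate horizontally on two source rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


upscaled = bilinear_upscale([[0, 10], [20, 30]], 2)
print([round(v, 2) for v in upscaled[0]])  # [0.0, 3.33, 6.67, 10.0]
```

Interpolation like this only spreads the existing pixel values over a larger grid, which is why a plainly upscaled image looks soft. A learned stage such as RAISR's is trained to restore edge detail that interpolation cannot recover.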

Next year’s event is the centennial of the first NAB Show and takes place from April 15th-19th in Las Vegas.

– Pam Fisher – Lead, IPTC Video Metadata Working Group